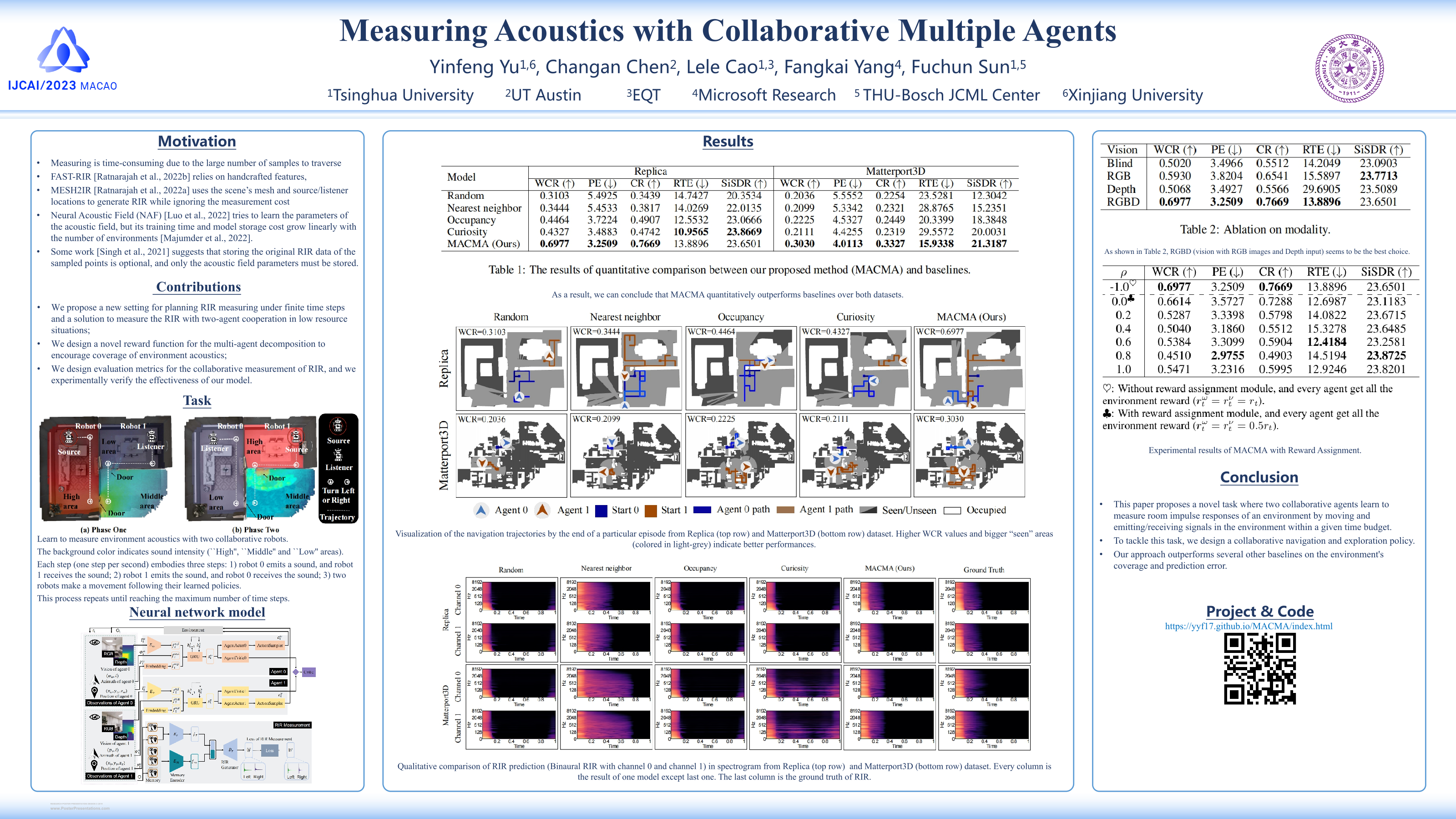

Measuring Acoustics with Collaborative Multiple Agents

Tsinghua University (THU)

International Joint Conference on Artificial Intelligence (IJCAI), 2023

Paper | Code | Slides | Bibtex | Paper(main+appendix)

Abstract

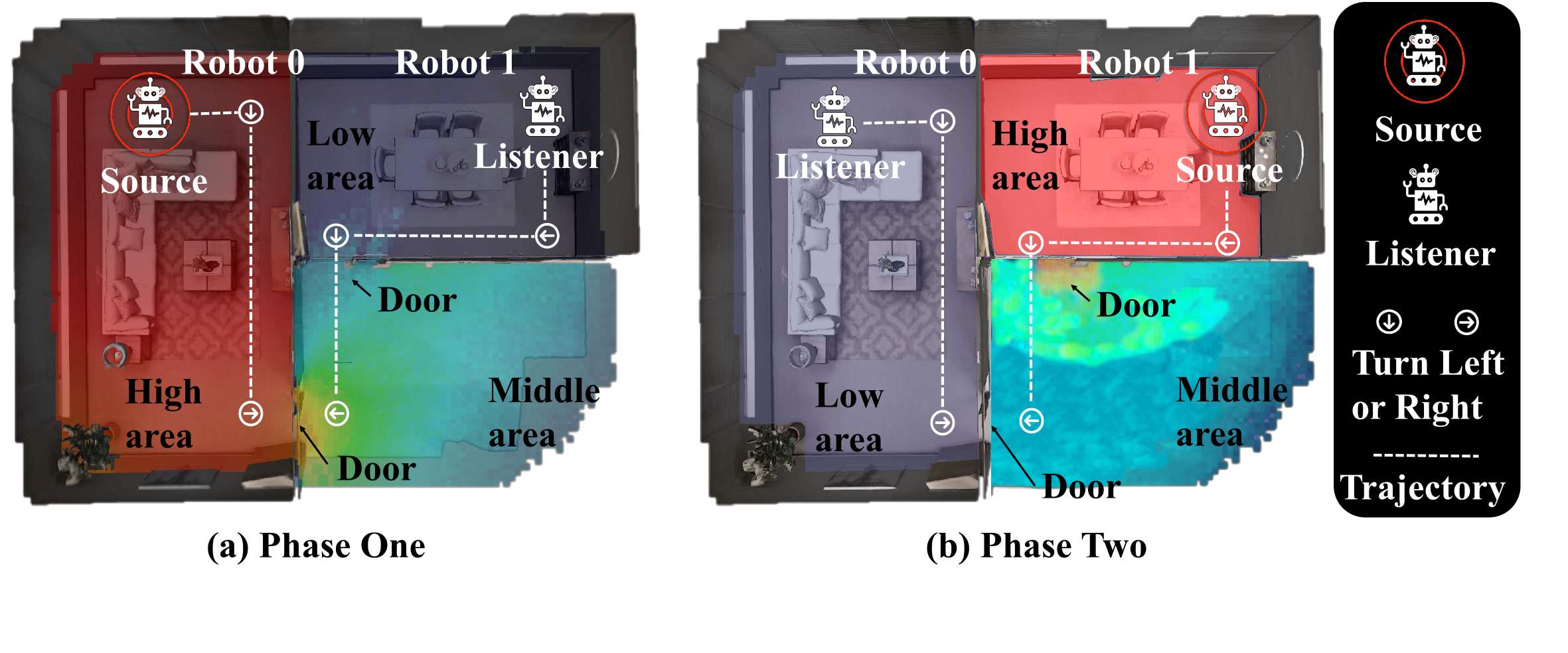

As humans, we hear sound every second of our lives. The sound we hear is shaped largely by the acoustics of the surrounding environment. For example, a spacious hall produces more reverberation. Room impulse responses (RIRs) are commonly used to characterize environment acoustics as a function of the scene geometry, materials, and source/receiver locations. Traditionally, RIRs are measured by setting up a loudspeaker and a microphone in the environment for each pair of source/receiver locations, which is time-consuming and inefficient. We propose to let two robots measure the environment's acoustics by actively moving and emitting/receiving environmental signals. We also design a collaborative multi-agent policy in which the two robots are trained to explore the environment's acoustics while being rewarded for accurate predictions and wide exploration. We show that the robots learn to collaborate and move to explore environment acoustics while minimizing the prediction error. To the best of our knowledge, this is the first task in which robots, rather than humans, learn to measure environmental acoustics. Project: https://yyf17.github.io/MACMA.
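To make the role of an RIR concrete, the following is a minimal sketch, not the method used in the paper. It assumes a toy RIR model (white noise under an exponential decay that drops 60 dB after a chosen reverberation time) and shows that the sound at a receiver can be modelled as the dry source signal convolved with the RIR. The function names `synthetic_rir` and `apply_rir` and all parameter values are hypothetical and chosen for illustration only.

```python
import numpy as np


def synthetic_rir(rt60_s, sample_rate=16000, length_s=0.5, seed=0):
    """Toy RIR (an assumption, not a measured response):
    white noise shaped by a decay that reaches -60 dB at rt60_s seconds."""
    rng = np.random.default_rng(seed)
    t = np.arange(int(length_s * sample_rate)) / sample_rate
    # Amplitude falls by a factor of 1000 (60 dB) after rt60_s seconds.
    decay = 10.0 ** (-3.0 * t / rt60_s)
    rir = rng.standard_normal(t.size) * decay
    rir[0] = 1.0  # direct-path impulse
    return rir


def apply_rir(dry, rir):
    """Model the received signal as the dry signal convolved with the RIR."""
    return np.convolve(dry, rir)


sample_rate = 16000
dry = np.zeros(sample_rate // 10)
dry[0] = 1.0  # a unit impulse as the emitted signal

small_room = synthetic_rir(rt60_s=0.2)  # short reverberation
large_hall = synthetic_rir(rt60_s=1.0)  # long reverberation

wet = apply_rir(dry, small_room)

# Compare the energy that remains after the first 0.2 seconds.
tail = int(0.2 * sample_rate)
small_tail_energy = float(np.sum(small_room[tail:] ** 2))
large_tail_energy = float(np.sum(large_hall[tail:] ** 2))
```

Because the emitted signal here is a unit impulse, the received signal begins with the RIR itself, which is why playing a known signal and recording it at a receiver can reveal the response. Under this toy model, the longer reverberation time leaves more energy in the tail, matching the observation that a spacious hall sounds more reverberant.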

Materials

Presentation

Citation

@inproceedings{YinfengIJCAI2023MACMA,
  author    = {Yinfeng Yu and Changan Chen and Lele Cao and Fangkai Yang and Wenbing Huang and Fuchun Sun},
  title     = {Measuring Acoustics with Collaborative Multiple Agents},
  booktitle = {The 32nd International Joint Conference on Artificial Intelligence, {IJCAI} 2023, Macao, 19th-25th August 2023},
  year      = {2023}
}

Acknowledgement

We thank the reviewers of IJCAI 2023 for their insightful comments.

The following projects jointly supported this work:

the Sino-German Collaborative Research Project Crossmodal Learning (identification number NSFC62061136001/DFG SFB/TRR169);

the National Natural Science Foundation of China (No. 61961039);

the National Key R&D Program of China (No. 2022ZD0115803);

the Xinjiang Natural Science Foundation (No. 2022D01C432, No. 2022D01C58, No. 2020D01C026 and No. 2015211C288).

This work was also partially funded by the THU-Bosch JCML center.