Echo-Enhanced Embodied Visual Navigation

Tsinghua University (THU)

Neural Computation (2023)

Abstract

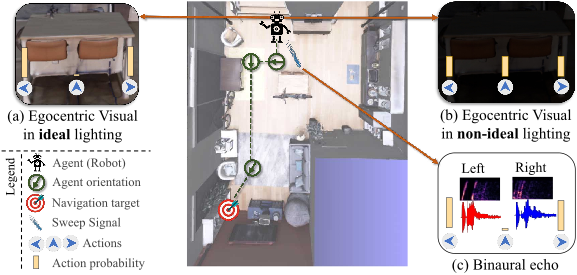

Visual navigation involves a movable robotic agent striving to reach a point goal (target location) using visual sensory input. While navigation with ideal visibility has seen plenty of success, it becomes challenging in sub-optimal visual conditions such as poor illumination, where traditional approaches suffer from severe performance degradation. To mitigate this problem, we propose E3VN (echo-enhanced embodied visual navigation), which effectively perceives the surroundings even under poor visibility. This is made possible by adopting an echoer that actively perceives the environment via auditory signals. E3VN models the robot agent as playing a cooperative Markov game with that echoer. The action policies of the robot and the echoer are jointly optimized to maximize the reward in a two-stream actor-critic architecture. During optimization, the reward is also adaptively decomposed into robot and echoer parts. Our experiments and ablation studies show that E3VN is consistently effective and robust in point goal navigation tasks, especially under non-ideal visibility.
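To make the two-stream idea concrete, here is a minimal toy sketch in Python. It is not the authors' implementation: the stateless bandit setting, the `Stream` class, the action sets, the toy reward, and the fixed `mix_logit` are all assumptions chosen for illustration. In particular, the paper decomposes the reward adaptively, whereas this sketch uses a fixed split. It only shows the general shape of two actor-critic streams sharing one cooperative reward that is divided into a robot part and an echoer part.

```python
import math
import random


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def decompose_reward(team_reward, mix_logit):
    """Split a shared reward into (robot, echoer) shares that sum to the total.

    Assumption: a sigmoid of `mix_logit` gives the robot's share. Here the
    logit is a fixed input; an adaptive scheme would learn it.
    """
    w = 1.0 / (1.0 + math.exp(-mix_logit))
    return w * team_reward, (1.0 - w) * team_reward


class Stream:
    """One actor-critic stream (hypothetical, stateless toy version):
    a softmax policy over actions plus a scalar value baseline."""

    def __init__(self, n_actions, lr=0.1):
        self.logits = [0.0] * n_actions
        self.value = 0.0
        self.lr = lr

    def act(self, rng):
        probs = softmax(self.logits)
        return rng.choices(range(len(probs)), weights=probs)[0]

    def update(self, action, reward):
        probs = softmax(self.logits)
        advantage = reward - self.value  # critic acts as a baseline
        for a in range(len(self.logits)):
            # Gradient of log pi(action) with respect to logit a.
            grad = (1.0 if a == action else 0.0) - probs[a]
            self.logits[a] += self.lr * advantage * grad
        self.value += self.lr * advantage


def train(steps=2000, seed=0):
    rng = random.Random(seed)
    robot = Stream(n_actions=3)   # e.g. forward / left / right (assumed)
    echoer = Stream(n_actions=2)  # e.g. stay silent / emit echo (assumed)
    for _ in range(steps):
        a_r, a_e = robot.act(rng), echoer.act(rng)
        # Toy cooperative reward: the team scores only when the robot
        # picks action 0 while the echoer picks action 1.
        team_reward = 1.0 if (a_r == 0 and a_e == 1) else 0.0
        r_robot, r_echoer = decompose_reward(team_reward, mix_logit=0.0)
        robot.update(a_r, r_robot)
        echoer.update(a_e, r_echoer)
    return robot, echoer
```

Calling `train()` returns the two streams, and `softmax(robot.logits)` and `softmax(echoer.logits)` can then be inspected to see which joint behaviour the shared reward favoured. A fuller version would condition both policies on observations (images and echo responses) and learn the split rather than fixing it.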

Citation

@article{10.1162/neco_a_01579,

author = {Yu, Yinfeng and Cao, Lele and Sun, Fuchun and Yang, Chao and Lai, Huicheng and Huang, Wenbing},

title = {Echo-Enhanced Embodied Visual Navigation},

journal = {Neural Computation},

volume = {35},

number = {5},

pages = {958-976},

year = {2023},

month = {04},

issn = {0899-7667},

doi = {10.1162/neco_a_01579},

url = {https://doi.org/10.1162/neco_a_01579},

eprint = {https://direct.mit.edu/neco/article-pdf/35/5/958/2079357/neco_a_01579.pdf},

}

Acknowledgement

This work is funded by the Sino-German Collaborative Research Project "Crossmodal Learning" (identification number NSFC62061136001/DFG SFB/TRR169).