Sound Adversarial Audio-Visual Navigation

Tsinghua University (THU)

International Conference on Learning Representations (ICLR), 2022

Abstract

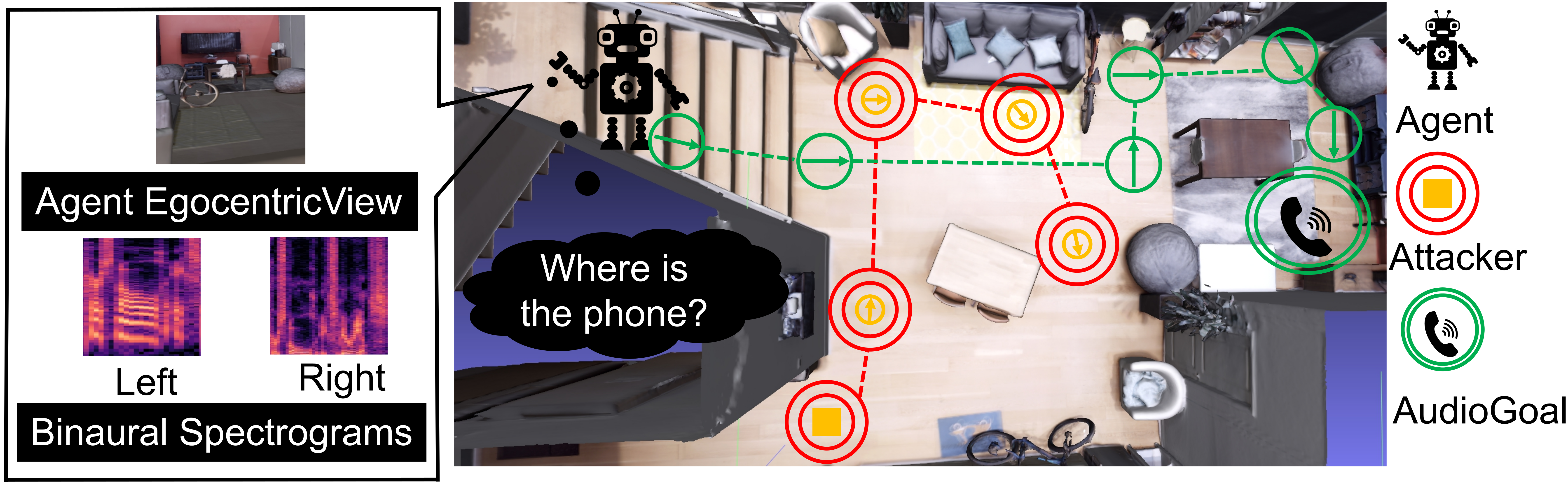

The audio-visual navigation task requires an agent to find a sound source in a realistic, unmapped 3D environment using egocentric audio-visual observations. Existing audio-visual navigation works assume a clean environment that contains only the target sound. That assumption does not hold in most real-world applications because of unexpected sound noise or intentional interference. In this work, we design an acoustically complex environment in which, besides the target sound, a sound attacker plays a zero-sum game with the agent. More specifically, the attacker can move and change the volume and category of its sound to hinder the agent from finding the sounding object, while the agent tries to dodge the attack and navigate to the goal despite the interference. By imposing certain constraints on the attacker, we improve the robustness of the agent to unexpected sound attacks in audio-visual navigation. For better convergence, we develop a joint training mechanism that employs a centralized critic with decentralized actors. Experiments on two real-world 3D scan datasets, Replica and Matterport3D, verify the effectiveness and robustness of the agent trained in our designed environment when it is transferred to a clean environment or to one containing sound attackers with random policies. Project: https://yyf17.github.io/SAAVN.
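The zero-sum game and the centralized-critic training described in the abstract can be illustrated with a small sketch. It is not the authors' implementation: the constraint values (`MAX_VOLUME`, `ALLOWED_CATEGORIES`), the `AttackerAction` fields and every function name are hypothetical. Sharing one critic between both actors by negating the advantage is an assumption that follows from the zero-sum reward. It is not a detail stated above.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical attacker constraints; the abstract only says "certain constraints".
MAX_VOLUME = 1.0
ALLOWED_CATEGORIES = (0, 1, 2)  # hypothetical indices of permitted sound categories


@dataclass
class AttackerAction:
    move: str      # hypothetical movement command, e.g. "forward" or "stay"
    volume: float  # requested loudness of the attacking sound
    category: int  # requested sound category index


def constrain(action: AttackerAction, max_volume: float = MAX_VOLUME) -> AttackerAction:
    """Project a raw attacker action into the (hypothetical) feasible set."""
    volume = min(max(action.volume, 0.0), max_volume)
    category = action.category if action.category in ALLOWED_CATEGORIES else ALLOWED_CATEGORIES[0]
    return AttackerAction(action.move, volume, category)


def zero_sum_rewards(agent_reward: float) -> Tuple[float, float]:
    """Zero-sum game: the attacker receives the negation of the agent's reward."""
    return agent_reward, -agent_reward


def discounted_returns(rewards: List[float], gamma: float = 0.99) -> List[float]:
    """Compute G_t = r_t + gamma * G_{t+1}, iterating backwards over one episode."""
    returns, g = [], 0.0
    for r in reversed(rewards):
        g = r + gamma * g
        returns.append(g)
    return returns[::-1]


def centralized_advantages(
    agent_rewards: List[float], joint_values: List[float], gamma: float = 0.99
) -> Tuple[List[float], List[float]]:
    """Advantages for both decentralized actors from one centralized critic.

    joint_values[t] is the critic's estimate V(s_t) of the agent's return given the
    joint (agent + attacker) state. Because rewards are zero-sum, the attacker's
    return and value are negations of the agent's, so its advantage is negated too.
    """
    returns = discounted_returns(agent_rewards, gamma)
    agent_adv = [g - v for g, v in zip(returns, joint_values)]
    attacker_adv = [-a for a in agent_adv]
    return agent_adv, attacker_adv


if __name__ == "__main__":
    # An out-of-range request is clipped to the allowed volume and category.
    print(constrain(AttackerAction("forward", 1.7, 5)))
    print(centralized_advantages([0.0, 0.0, 1.0], [0.25, 0.25, 0.5], gamma=0.5))
```

With zero-sum rewards and a shared discount factor, the attacker's return is exactly the negation of the agent's. A single critic over the joint state can therefore serve both decentralized actors. In a full system the value estimates would be learned, and these advantages would feed a policy-gradient update (for example, PPO).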

Citation

@inproceedings{YinfengICLR2022saavn,
  author    = {Yinfeng Yu and
               Wenbing Huang and
               Fuchun Sun and
               Changan Chen and
               Yikai Wang and
               Xiaohong Liu},
  title     = {Sound Adversarial Audio-Visual Navigation},
  booktitle = {The Tenth International Conference on Learning Representations, {ICLR}
               2022, Virtual Event, April 25-29, 2022},
  publisher = {OpenReview.net},
  year      = {2022},
  url       = {https://openreview.net/forum?id=NkZq4OEYN-},
  timestamp = {Thu, 18 Aug 2022 18:42:35 +0200},
  biburl    = {https://dblp.org/rec/conf/iclr/Yu00C0L22.bib},
  bibsource = {dblp computer science bibliography, https://dblp.org}
}

Acknowledgement

This work was jointly supported by the following projects:

the Sino-German Collaborative Research Project Crossmodal Learning (NSFC 62061136001/DFG TRR169);

the Beijing Science and Technology Plan Project (No. Z191100008019008);

the National Natural Science Foundation of China (No. 62006137); and

the Natural Science Project of Scientific Research Plan of Colleges and Universities in Xinjiang (No. XJEDU2021Y003).